May 30, 2022

AI for SEO: learn which keywords are similar, without labelling data

Intent classification

Product-led SEO teams are often interested in how to use AI for SEO, and many are enthralled by intent classification. AI can help us understand how similar the meaning of two keywords is. Intent classification, specifically, is a kind of AI tool for understanding the implicit expectations about the types of pages which answer a particular SEO query:

- pages in which a user can transact;

- blogs, reviews and other informational content;

- or pages from a particular site.

There are many other types. For instance:

- topics for which “Ultimate Guides” rule;

- topics with lots of listicle answers;

- topics with only product pages;

- or topics in which pages with maps always rank.

However, these are only implicit expectations about the types of results that deserve to rank. There is another kind of implicit intent: domain relevance.

Why is domain relevance useful in AI for SEO?

I recently broke one rib and bruised the rest of them when a car opened its door too quickly and my bike slammed into it. So, it’s funny to see the big difference in results with small changes in the search query, like bruised rib, ribs near me, rib for hire, or ribbed top. These differences in search engine results stem from different domains. Words in a domain share a similar meaning, even when they don’t share any of the actual words. And very similar keywords often belong to completely different domains. We can use AI to learn how similar keywords are.

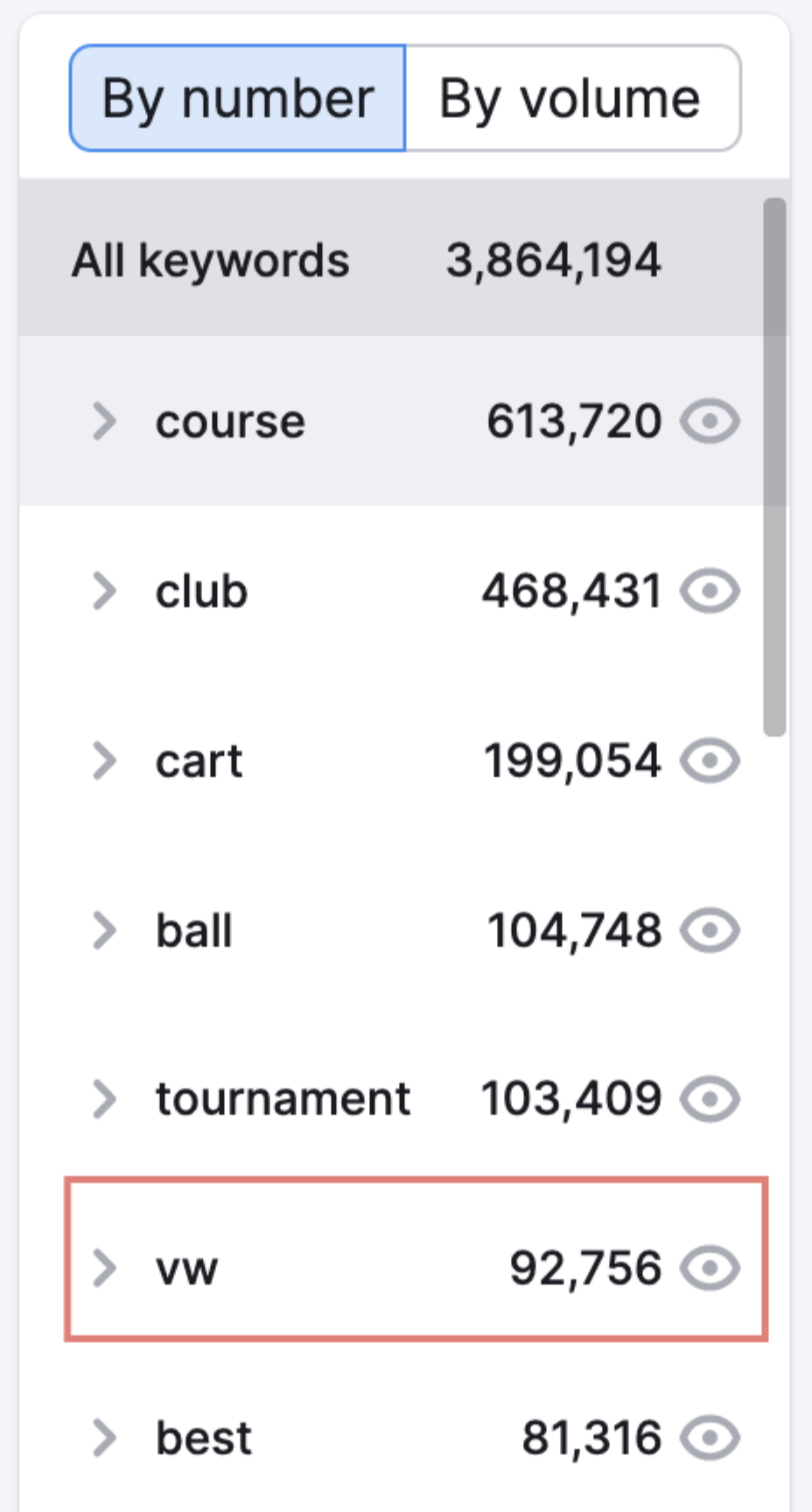

If we search on SEMrush or Ahrefs for competitive keywords for golf we will find keywords both related to cars and to sport:

Again, we are seeing that two keywords with very similar characters might have very different meanings, like golf 1.2 or golf cart. That’s because keyword tools just match on the characters in the keyword using conventional software, rather than using AI to understand what it means.

Another favourite of mine: if you search for “internal linking” in your favourite keyword tool, you’ll see both keywords like internal link, internal links, internal linking, internal link structure, internal linking tool… and international links melreese country club and internal temp of sausage links. Ngram analysis will only get you so far! Matching to the words in the keyword won’t help. We need a way to understand the domain hidden behind that.
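To see why word matching misleads, here is a minimal sketch that scores keywords by how many words they share with a seed keyword. The Jaccard overlap used here is our choice for illustration, not necessarily what any keyword tool does, and "site architecture" is a hypothetical extra keyword that is not from a real tool export.

```python
def token_overlap(a: str, b: str) -> float:
    """Jaccard overlap between the word sets of two keywords."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


seed = "internal links"
candidates = [
    "internal link structure",         # relevant, but shares only one exact word
    "internal temp of sausage links",  # irrelevant, but shares two exact words
    "site architecture",               # hypothetical: related meaning, no shared words
]

for keyword in candidates:
    print(f"{keyword!r}: {token_overlap(seed, keyword):.2f}")
```

The sausage keyword scores 0.40, the genuinely related "internal link structure" scores 0.25, and the hypothetical "site architecture" scores 0.00. Word overlap ranks them in the wrong order.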

How is disambiguation used in AI for SEO?

Recently an SEO friend asked how he could filter out irrelevant results for his bike shop client as he went through different related bike queries: bicycle → best bike 2022 → trek → star trek. He asked what he could use to work out that one of those was more 👾 than 🚴‍♀️. In AI, this problem is called disambiguation and, for product growth teams, it’s both a tough one and a useful one.

One approach is to find a way to take any keyword and map it to a general category in order to disambiguate it. Relevance is a very important part of identifying new keywords, topics and pages which could be a part of a site, but are not yet. If you’re considering how to automate creating category pages for your site, you need a way to identify the domain.

Training AI for SEO using Google Search Console data – without labelling data

There are some different ways to identify domain relevance from a keyword. We use a number of different ones to build our grid of SEO data to help teams automate their SEO strategy through actionable page-level decisions. We give you access to SERP intent, to match the words used in the keywords which form a topic, and we use machine learning classifiers to identify the implicit domain.

It’s common to manually label data for an AI classifier. In the past, I (Robin here) spent a lot of time with our team building out a workflow to manually label data to build up our set of training data. We used multi-label data. It was a pain for a bunch of reasons:

- sometimes the labellers got it wrong

- sometimes we needed to change our ontology of labels

- and mostly it was just very hard to scale.

We found an alternative approach that works a lot better. In this post, we will focus instead on how to build a category classifier using keyword data from Google Search Console (GSC).

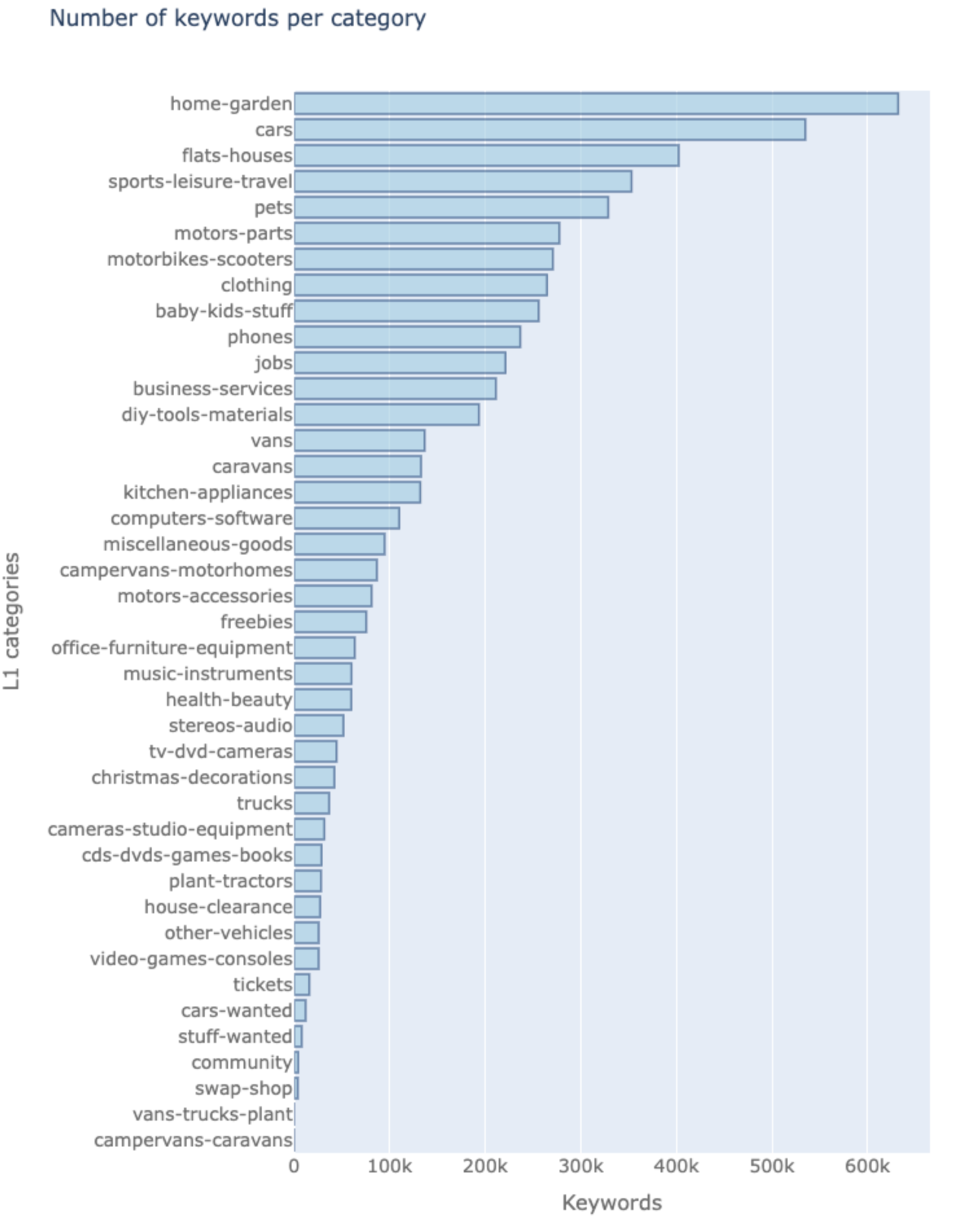

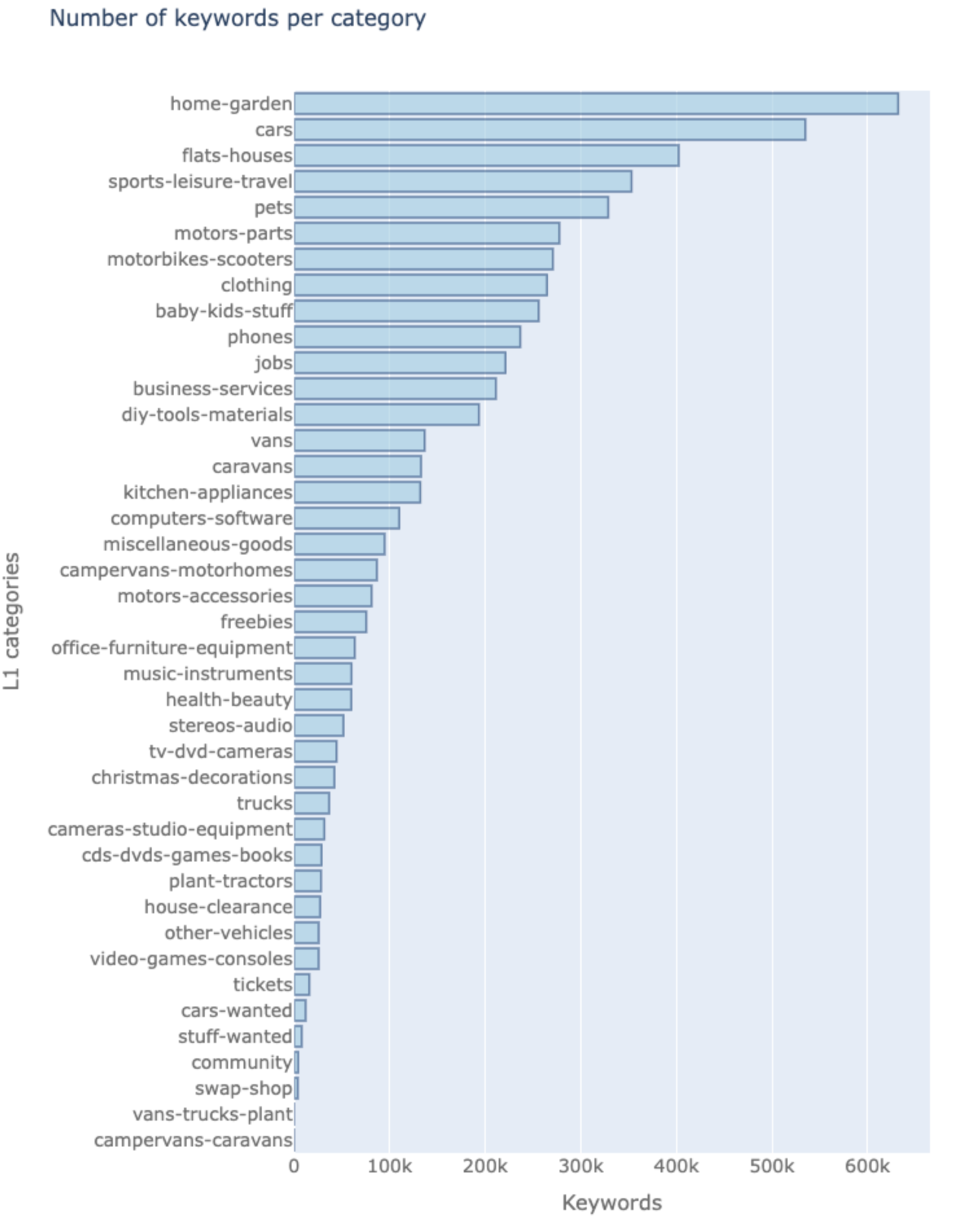

Ranking keywords, and the pages they rank for, are sourced from our large-scale repository of GSC data for a large site (we’ll call our example largesite.com). Every site has a fixed set of categories that form a browse taxonomy representing the navigational hierarchy of the pages on the site. Labelling them by depth as levels L1, L2, L3 and so on gives us an idea of the depth of the graph that the sitemap entails. For largesite.com, we find 7 L1s, 166 L2s, 1,340 L3s, 865 L4s, and 199 L5s to begin with.

But for domain relevance, the taxonomy in play need not map onto these category levels. In this context we would like parent L1s that semantically encompass all of their L2 topics (and so forth), and not just the immutable browse taxonomy. So, even if the browse taxonomy puts red-audi-cars and audi-cars on the same level, we can transform them to L1: audi-cars and L2: red-audi-cars, which is a semantically better representation. To this end, a proprietary category mapper is used to abstract out L1 categories from the page URLs. After preprocessing the data and deduping the keywords, we end up with 5.6 million keywords with category labels.
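Our category mapper is proprietary, so the sketch below is only a much simpler stand-in for the general idea: it takes the first path segment of the ranking URL as the label and dedupes the keywords. The function name `l1_category` and the example URLs are hypothetical, and the real mapper also does the semantic re-levelling described above, which this does not attempt.

```python
from typing import Dict, Optional
from urllib.parse import urlparse


def l1_category(url: str) -> Optional[str]:
    """Return the first path segment of a URL as a rough category label."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else None


# Hypothetical (keyword, ranking URL) rows, shaped like a GSC export.
rows = [
    ("red audi a3", "https://largesite.com/cars/audi/red"),
    ("Red Audi A3 ", "https://largesite.com/cars/audi/red"),  # duplicate keyword
    ("garden chairs", "https://largesite.com/home-garden/chairs"),
    ("largesite", "https://largesite.com/"),  # homepage: no category
]

labelled: Dict[str, str] = {}
for keyword, url in rows:
    category = l1_category(url)
    if category is not None:
        # Normalise the keyword and keep the first label seen for it.
        labelled.setdefault(keyword.strip().lower(), category)

print(labelled)
```

Because the labels come from code rather than people, changing the taxonomy means editing the mapping function and re-running it.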

Note that the taxonomy of the site limits the applications of the AI classifier that we build. For instance, this site doesn’t have any food & drink or restaurant sections. As a result, keywords like spare ribs or banana don’t get classified well. This is a problem you would also have if you had manually labelled your data. However, because we are using a proprietary category mapper, we could map the keywords of another site which ranks for restaurant and food queries to another category, and re-run everything. This approach to training using programmatically generated training data has been called Software 2.0. Alongside not needing hand-labelled data, one of its benefits is this ability to quickly iterate on taxonomies of labels.

Although the AI classifier is limited by the site, the SEO keywords are not. One of the amazingly powerful benefits of this approach is that it works with literally any keyword you can think of, and many which you cannot. Given that the vast majority of keywords are long-tail keywords which have never been searched for before, we need classifiers to make sense of them. Another benefit of using classifiers is that you don’t need to look at the SERP each time you do a look-up. Although there is an up-front cost to training, classifiers can be cheap to run at inference time. (You do need to be careful how large a model you use, of course.)

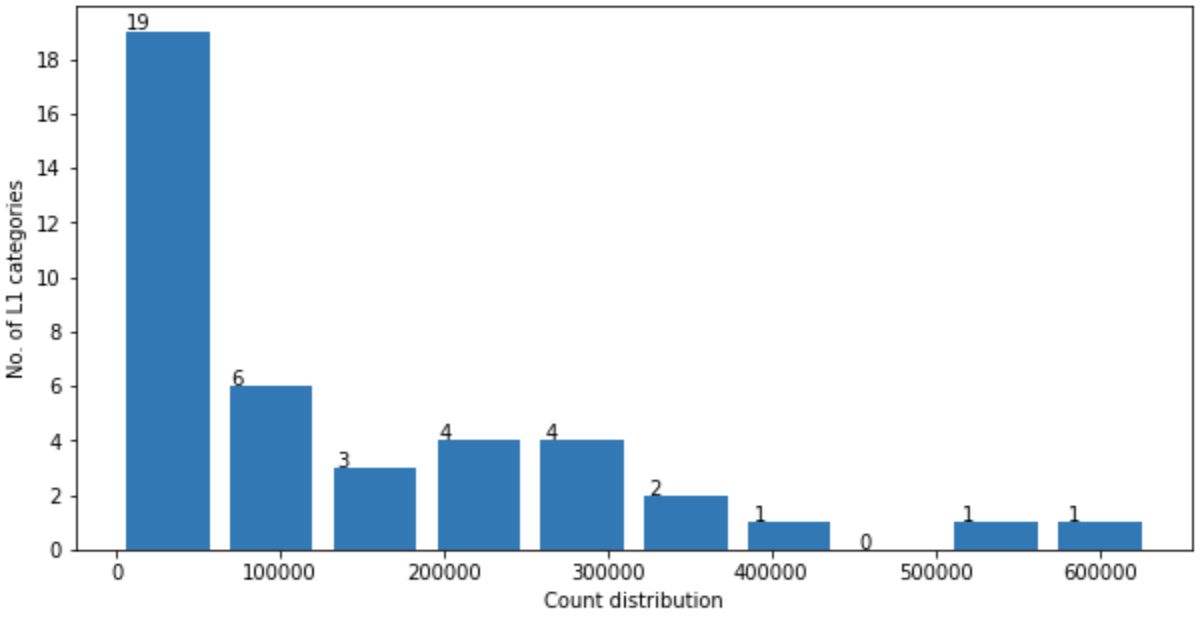

A quick look at the distribution of the keywords across the 41 categories shows a typical imbalance: the lightest category has 16 keywords and the heaviest has 631k.

To avoid biasing the model towards one category or another, we need a reasonably even spread of categories for modelling. A simple approach is to cap the number of keywords sampled from each category. Looking at the distribution of the category-wise counts, 50,000 keywords per category seems doable.

Having sampled up to 50k keywords per category, we make an 80-20 train-test split across the whole dataset. Our training data with 1.26M keywords and testing data with 300k keywords is ready.
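The capping and splitting steps can be sketched in a few lines of standard-library Python. This is an illustration under our own assumptions rather than our production pipeline: the function name `cap_and_split`, the fixed random seed and the toy data are all hypothetical.

```python
import random
from collections import defaultdict


def cap_and_split(pairs, cap=50_000, test_fraction=0.2, seed=0):
    """Cap each category at `cap` keywords, then shuffle and split train/test."""
    rng = random.Random(seed)

    # Group the (keyword, category) pairs by category.
    by_category = defaultdict(list)
    for keyword, category in pairs:
        by_category[category].append((keyword, category))

    # Small categories are kept whole; large ones are randomly down-sampled.
    sampled = []
    for items in by_category.values():
        sampled.extend(items if len(items) <= cap else rng.sample(items, cap))

    # Split across the whole dataset, not per category.
    rng.shuffle(sampled)
    n_test = int(len(sampled) * test_fraction)
    return sampled[n_test:], sampled[:n_test]


# Toy data: one heavy category and one light one.
pairs = [(f"car keyword {i}", "cars") for i in range(100)]
pairs += [(f"ticket keyword {i}", "tickets") for i in range(5)]

train, test = cap_and_split(pairs, cap=20)
print(len(train), len(test))
```

With a cap of 20, the heavy category contributes 20 keywords and the light one keeps all 5, so the split is made over 25 keywords in total. Note that capping only limits the heaviest categories; the lightest ones stay small.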

Take a look at those numbers again: 1,260,000 keywords with which to train is a huge number. A good rule of thumb in AI is that, all other things being equal, the more data, the better the results. In SEO, you have a lot of data available — use it!

The downside of this automatically labelled data is that it can be noisy. Noisy is an AI term meaning that the data we’re using to train the model is occasionally wrong. Much like the old joke about advertising, that 50% of it works but you just never know which 50% (and if that’s still your experience, you should consider using our no-code enterprise SEO tool to scale organic revenue), noisily labelled data is wrong 5-10% of the time, but we just don’t know when. For now, we are going to let the model handle this. However, there are some useful approaches, known as ‘weak supervision’, that automatically find the most useful training data by using an understanding of where the noise comes from. Weak supervision is part of the Software 2.0 approach. This Snorkel article is a little old, but it is a great introduction to weak supervision.

Which text classification machine learning models should we use to train AI for SEO keywords?

Our team have tested out a number of different AI models for training SEO keyword classifiers. It’s common in the SEO community to use very large language models, such as BERT or GPT-3. These are powerful for tasks such as natural language generation (NLG) and can capture the nuance inherent in language for multi-paragraph bodies of text. Some of that power comes from the attention-based Transformer architecture that these models are built on. But that power also comes at a cost: these models are hugely expensive to train. They can also be relatively expensive to run at inference time, i.e. when you want to classify a keyword.

Facebook’s fastText is a fast, scalable, robust and efficient text classification library that also provides pretrained vectors for easy representation learning from texts. A vector is a point in n-dimensional space. Often text representation vectors are 300-dimensional. If your eyes just crossed over when we started talking about dimensionality… it’s actually not that hard. A two-dimensional vector is a point you can pick on a graph with an x and y axis — think of a square. You can write it down as something like (0.1, 0.3). A three-dimensional vector is a point you can pick with an x, y and z axis — think of a cube. You can write that down as something like (0.1, 0.3, 0.9). Most of your intuitions about how to think about points on squares or cubes translate to 300 dimensions too. But then you’ll be writing down three hundred numbers (0.1, 0.3, 0.9, … ). If you think of a vector as a point in a square or a cube, you’ll be in a great starting place. Most of the work in text representation is talking about how far away one vector is from another or which direction one vector is from another. If you’d like an approachable introduction to thinking in more than three dimensions, you could start with the book, Flatland (enjoy the wit and mind-expanding exercises in thinking in one more dimension, but please excuse the sexism).
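To make "how far away" and "which direction" concrete, here is a small sketch of the two measures most often used to compare vectors: Euclidean distance and cosine similarity. The three-dimensional vectors are made up for illustration and are not real fastText vectors; the same functions work unchanged on 300 dimensions.

```python
import math


def euclidean_distance(u, v):
    """How far apart two points are."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def cosine_similarity(u, v):
    """How closely two vectors point in the same direction (1.0 = identical)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # direction is undefined for a zero vector
    return dot / (norm_u * norm_v)


# Made-up 3-dimensional "keyword vectors", purely for illustration.
golf_cart = (0.1, 0.3, 0.9)
golf_buggy = (0.2, 0.3, 0.8)
golf_gti = (0.9, 0.2, 0.1)

print(cosine_similarity(golf_cart, golf_buggy))
print(cosine_similarity(golf_cart, golf_gti))
print(euclidean_distance(golf_cart, golf_buggy))
```

In this toy example the first pair points in nearly the same direction, so its cosine similarity is higher than that of the second pair.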

These vectors represent text in a variety of interesting ways. Typically they give a deeper understanding of the meaning of the words. Keywords meaning something similar will have vectors which are closer together. Those extra dimensions come in useful because often there are lots of layers of meaning hidden in those very few characters.

We use the 2 million word vectors trained on Common Crawl (about 600 billion tokens and their neighbourhood representations) to generate features from the keywords in the training data. Using these existing word vectors is a very convenient way to start with artificial intelligence for SEO.

fastText is powerful partly because it uses subwords rather than just words. “Subwords” are substrings of words. For instance, the word “subwords” contains substrings such as “sub”, “words”, “ubwo” and “ords”. The magic of subword-trained AI classifiers is that they can produce vectors for completely new words that they haven’t been trained on. In natural language processing (NLP) we call these “out of vocabulary” items or OOV. In the long-tail world of AI for SEO, the majority of keywords are keywords that you won’t have seen before. That means the majority of keywords are out of vocabulary. But long keywords are mostly made up of other keywords. And many of the words are made up of other substrings. So, subwords are a very powerful feature for keyword classification artificial intelligence models.
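Here is a minimal sketch of how a word can be broken into subwords. It follows the scheme described for fastText's subword vectors, as far as we understand it: the word is wrapped in `<` and `>` boundary markers and every character n-gram between a minimum and maximum length is extracted (3 to 6 characters in the word-vector defaults). The function name `char_ngrams` is ours, and the real library hashes these n-grams into buckets rather than storing them as strings.

```python
from typing import List


def char_ngrams(word: str, min_n: int = 3, max_n: int = 6) -> List[str]:
    """List the character n-grams of a word, including boundary markers."""
    wrapped = f"<{word}>"
    return [
        wrapped[i:i + n]
        for n in range(min_n, max_n + 1)
        for i in range(len(wrapped) - n + 1)
    ]


ngrams = char_ngrams("subwords")
print(ngrams[:8])

# Two spellings of the same brand share many of their subwords, which is
# why a subword model can still place the misspelling somewhere sensible.
shared = set(char_ngrams("michelin")) & set(char_ngrams("michellin"))
print(sorted(shared))
```

A vector for an unseen word can then be built from the vectors of the n-grams it shares with words seen in training.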

fastText is also more useful for us because it’s very efficient. One of the goals of fastText is that you can train it on a CPU, without needing GPUs or more powerful hardware. That means that it’s really very cheap, fast and efficient to train and run. In turn, that means that we can use our AI to classify billions of keywords easily to find which are really similar.

How do we train our AI model to automate keyword research?

The fastText classifier is trained on the prepared data with word n-grams of up to three words, so it learns from bigrams and trigrams as well as single words. This means the model is able to associate 2-word or 3-word chains with their respective categories. For example, if the model has learnt 'window cleaner rod' → diy-tools-materials and 'lawn mower for rent' → business-services, it will be able to map 'window cleaner for rent' → business-services, having learnt the for rent and window cleaner bigrams.
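A rough sketch of this training step follows, using the open-source `fasttext` Python package. Only the use of bigrams and trigrams comes from our setup as described here; the helper names, the file paths and the choice to pass pretrained vectors through `pretrainedVectors` are assumptions for illustration, and every other hyperparameter is left at the library default.

```python
from typing import Optional, Set


def word_ngrams(keyword: str, max_n: int = 3) -> Set[str]:
    """All word n-grams of a keyword, from single words up to max_n words."""
    words = keyword.split()
    return {
        " ".join(words[i:i + n])
        for n in range(1, max_n + 1)
        for i in range(len(words) - n + 1)
    }


def to_fasttext_line(keyword: str, category: str) -> str:
    """Format one training example the way fastText's supervised mode expects."""
    return f"__label__{category} {keyword.strip().lower()}"


def train_classifier(train_path: str, vectors_path: Optional[str] = None):
    """Train a supervised fastText model (hypothetical paths, default settings)."""
    import fasttext  # third-party package: pip install fasttext

    params = {"input": train_path, "wordNgrams": 3}
    if vectors_path:
        # Pretrained .vec files must match the model's dimension (300 here).
        params.update(pretrainedVectors=vectors_path, dim=300)
    return fasttext.train_supervised(**params)


# The unseen keyword shares a bigram with each of the two training examples.
unseen = word_ngrams("window cleaner for rent")
print(unseen & word_ngrams("window cleaner rod"))
print(unseen & word_ngrams("lawn mower for rent"))
print(to_fasttext_line("Window Cleaner Rod ", "diy-tools-materials"))
```

Once trained, `model.predict("window cleaner for rent", k=3)` returns the three most likely labels together with their probabilities.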

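The evaluation metrics on the testing data are encouraging, given the scale and diversity of the keywords used and the non-trivial number of categories. Here are some example keywords, each with the three categories the classifier returns for it: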

golf passat → ['cars', 'motors-parts', 'motors-accessories']

golf → ['sports-leisure-travel', 'cars', 'motors-parts']

gulf passat → ['cars', 'motors-parts', 'motors-accessories']

ribs → ['sports-leisure-travel', 'clothing', 'baby-kids-stuff']

spare ribs → ['sports-leisure-travel', 'motors-parts', 'baby-kids-stuff']

ribs for sale → ['sports-leisure-travel', 'clothing', 'baby-kids-stuff']

ribs for rent → ['clothing', 'sports-leisure-travel', 'baby-kids-stuff']

ribs for sale in london → ['sports-leisure-travel', 'house-clearance', 'freebies']

michellin → ['motors-accessories', 'sports-leisure-travel', 'other-vehicles']

michellin chef knife → ['jobs', 'kitchen-appliances', 'clothing']

michelin tire → ['motors-accessories', 'motors-parts', 'freebies']

banana → ['sports-leisure-travel', 'health-beauty', 'freebies']

banana republic → ['freebies', 'baby-kids-stuff', 'sports-leisure-travel']

name it → ['clothing', 'tickets', 'other-vehicles']

summer chairs → ['home-garden', 'freebies', 'office-furniture-equipment']

This application of classification models to Search Console data is a stepping stone to lots of other use cases. Once your product engineering team has code that understands how similar keywords actually are, a lot of powerful applications open up. While the keywords used here are only from a single market (the UK), it is possible to build classifiers for other markets that learn local search patterns. We could also use different pretrained word vectors or subword vectors to account for spelling errors creeping into Search Console data and other out-of-vocabulary problems.

In this example, we got our data from the Google Search Console API, but any source of keyword and site data is suitable. You just need to be able to extract a category intent from the site data. For example, you might consider competitive keywords from SEMrush or competitive SERP crawls, perhaps using breadcrumbs. And if you’re stuck on how to get lots more keyword data from GSC, look no further: we can help you get 10x the number of keywords and avoid all Google Search Console API limits.

If you’re interested in what you can automate when you have a holistic system of record for every page on your site, with all the SEO data you need – reach out, we’d love to have a chat.